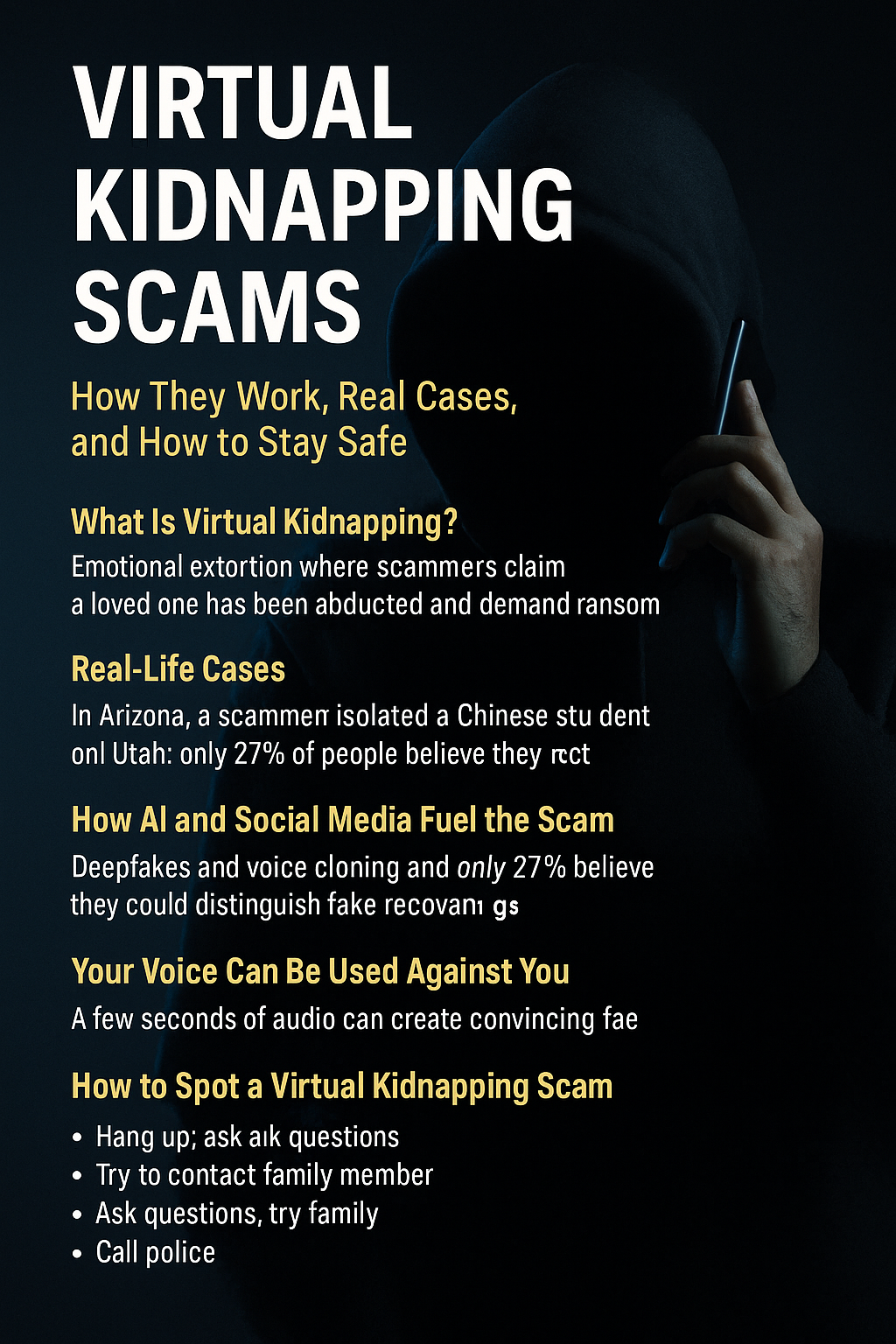

Real Cases, and How to Stay Safe in 2025

Virtual kidnapping scams are among the most terrifying modern crimes, combining technology and emotional manipulation to exploit fear. A sudden phone call claiming that your child or spouse has been kidnapped sends a bolt of panic through anyone. It's a nightmare scenario, and scammers are betting on exactly that reaction.

These high-pressure scams use fake voices, artificial intelligence, and personal information pulled from your own social media to make their threats seem real. In this guide, we’ll explain what virtual kidnapping is, share real-life cases, explore how criminals use AI and social media, and—most importantly—show you how to protect yourself and your family.

What Is Virtual Kidnapping?

Virtual kidnapping is a form of psychological extortion. Instead of actually abducting someone, scammers pretend a family member has been kidnapped. They use technology to fake a voice, generate background noise (like crying or screaming), and threaten violence unless a ransom is paid—often immediately.

The scammers use a mix of:

AI-generated voices that sound eerily real

Social media details to build credibility

Spoofed caller IDs to look like local or familiar numbers

These elements combine to create a frighteningly believable scam that can push victims into paying a ransom without ever verifying whether their loved one is truly in danger.

Real-Life Virtual Kidnapping Cases That Show the Danger

💔 Case 1: Arizona Mom Targeted with AI-Generated Voice of Daughter

In one chilling incident in Arizona, a mother received a call from an unknown number. The voice on the other end sounded exactly like her 15-year-old daughter—crying, begging for help. Then, a man took the phone and demanded a $1 million ransom.

The mother, who took the call while at her younger daughter's dance rehearsal, was able to confirm that her 15-year-old was safe on a ski trip. Even so, the trauma and panic were all too real. She later described the ordeal in testimony before the U.S. Senate Judiciary Committee, highlighting how AI is being weaponized by scammers.

❄️ Case 2: Chinese Exchange Student Isolated and Exploited

In Utah, scammers convinced a Chinese exchange student to isolate himself in a remote location, claiming that his family was at risk unless he cooperated. They coerced him into taking photos that looked like he had been kidnapped—photos that were later used to extort $80,000 from his panicked parents overseas.

This case shows the lengths scammers will go to in manipulating victims, especially when cultural or language barriers are involved.

How AI and Social Media Fuel These Scams

📱 AI Voice Cloning Makes Scams More Believable

Today’s AI tools can replicate a person’s voice from just a few seconds of audio. If your voice—or your child’s—is online in a YouTube video, TikTok clip, Instagram Story, or even a voicemail, it can be harvested and used to create fake but realistic audio.

According to a recent McAfee report, only 27% of people believe they could tell the difference between a real voice and an AI-generated one. That leaves nearly three in four people unsure they could spot a cloned voice, which is exactly the doubt scammers exploit.

🔍 Where Do Scammers Get the Info?

Most of the information comes from public social media profiles. Many users unknowingly overshare online—posting:

Children’s names and school information

Travel plans and vacation check-ins

Pet names and personal nicknames

Voice recordings or video clips

Each of these seemingly harmless details helps scammers build a believable story. With AI, they can even simulate background sounds such as crying or a struggle to make the call sound authentic.

🧠 “AI-Driven Compute Power” Is Now in Criminal Hands

“Bad actors now have access to powerful tools that weren’t previously available, leveraging AI-driven compute power to gather intelligence at scale,” said Arun Shrestha, CEO at BeyondID.

Criminals no longer need complex hacking skills—they can use free or low-cost AI tools to generate content, fake identities, and even deepfake videos.

Your Voice Can Be Used Against You

You may not realize it, but every time you post a video or audio clip publicly, you could be giving criminals material. With as little as 3–5 seconds of clean audio, scammers can use voice-cloning software to mimic you or your family.

Even short clips of your child's birthday, a vacation vlog, or a recorded Zoom call posted online can be weaponized. Imagine getting a call in which your child seems to be crying and screaming for help, when the voice is really a deepfake.

These cloned voices are often paired with a second "kidnapper" voice, disguised with a voice changer or AI effects to make the caller sound more dangerous and intimidating.

How to Spot and Respond to a Virtual Kidnapping Scam: 8 Essential Tips

Here are eight critical steps to follow if you ever get a suspicious kidnapping call:

Hang up immediately if something doesn’t feel right. Scammers use urgency to keep you off balance.

Call or message your loved one on another device to confirm their safety.

Ask specific personal questions only your real family member would know—like childhood pets or inside jokes.

Don’t speak too much. The more you say, the more the scammer can use against you.

Don't send money, gift cards, or cryptocurrency before you have confirmed your loved one's safety. Demands for fast, hard-to-trace payment are a classic sign of a scam.

Do not give out personal info like your address or family members’ names during a suspicious call.

If you do stay on the line, take a breath and slow down. Ask the caller to repeat themselves or clarify their demands to buy time while you or someone nearby tries to reach your loved one.

Call local police immediately. Law enforcement is familiar with these scams and can help assess the risk.

How to Protect Your Family from Virtual Kidnapping Scams

Prevention is your best defense. Here’s how to reduce your risk:

Set your social media accounts to private.

Avoid posting real-time location updates.

Limit how much of your child’s identity is visible online.

Use parental controls and educate teens about online privacy.

Avoid accepting friend requests from strangers.

Post less about your daily routines or future travel plans.

You can also set up a family "safe word": a phrase only your inner circle knows, used to verify someone's identity in an emergency.

The Future of Scams: Why Virtual Kidnapping Is Likely to Rise

Virtual kidnapping scams represent a terrifying blend of technology, manipulation, and crime. As AI becomes more accessible and advanced, we can expect these scams to become:

More common

More convincing

Harder to detect

Even cybersecurity experts struggle to detect well-made AI impersonations. The gap between truth and fabrication is shrinking, and scammers are exploiting it.

Final Thoughts: Stay Alert, Stay Calm, and Stay Private

Virtual kidnapping scams feed on fear. But knowledge, awareness, and calm thinking are your best defense. As technology evolves, so must our defenses and our understanding of the new digital threats it brings.

👨‍👩‍👧‍👦 Talk with your family about this type of scam.

🧠 Educate your children about online safety.

🔐 Lock down your social media.

🛡️ Trust your instincts—if something feels off, it probably is.

If you found this article helpful, share it to protect others—and consider exploring platforms like Wealthy Affiliate to build a legitimate, ethical online business while learning how to stay safe online.

Our website contains affiliate links. This means if you click and make a purchase, we may receive a small commission. Don't worry, there's no extra cost to you. It's a simple way you can support our mission to bring you quality content.
